
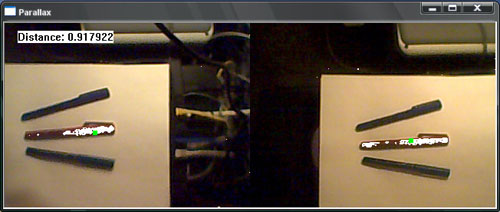

Robot Depth Perception

For my digital design class, my partners and I decided to make a robotic vision program that uses two webcams to estimate the distance to an object of a certain color. We chose red, but this can be changed in the source code. To use the program, you must arrange two webcams 14 centimeters apart with their lines of sight exactly parallel. If you are using two identical webcams, you will probably have to plug them into separate USB hubs on your computer (not two ports on the same hub). When you run the program, Windows will display a dialog box because it is not sure which inputs to use. Expand the drop-down box and select whichever camera was not originally selected. The camera feeds should then appear, and you will see green dots marking the location that each camera has locked onto.

Clicking on the video window toggles the display between three modes. The first is standard video, the second shows only pixels of the specified color, and the third shows the video with an overlay of white wherever the specified color is found. You can use the up and down arrow keys to control the tolerance for how close the pixels must be to the specified color. Pressing the down key tightens the tolerance, so fewer pixels pass the cuts.

The Algorithm

First, each image is processed to select out only the specified color. Each pixel is treated individually; its color is mapped to a vector in color space, where the x, y, and z components of the vector are given by the red, green, and blue values. If the length of the vector is below a certain threshold, then the color is considered black. If it is above this threshold, then the vector is normalized to unit length. This minimizes problems arising from variations in brightness. The specified color is normalized in the same fashion. Then the program calculates the distance in color space between the normalized pixel color and the normalized target color. If this distance is shorter than the length specified by the tolerance, then the pixel is kept; otherwise it is converted to white.

After filtering for the proper color, the center of mass of the remaining pixels is calculated in an attempt to find the center of the object. If there are multiple objects of the target color in the field of view, then the center of mass will not properly lock onto any one object; instead it will be somewhere between them. The green dots show the location of the calculated center of mass. Note that this is not the center of mass of the actual object, but the center of mass of the pixels that passed the cuts, assuming that each pixel has unit mass.

Finally, with the two center of mass pixel locations, we can estimate the distance using the parallax formula

d = s*L / (2*|p1 - p2|*tan(phi/2))

where s is the separation between the eyes, L is the number of pixels in the width of the image, phi is the spread angle of the camera, and p1 and p2 are the horizontal pixel coordinates of the centers of mass in the two images.

Limitations

The algorithm doesn't work that well under low lighting, and the objects need to be entirely within the field of view of both cameras to get reasonable estimates. It is also somewhat difficult to get two cameras aligned exactly right. Because the pixel offset |p1 - p2| shrinks as the object moves away, a fixed alignment error makes up a larger fraction of it, so the error in the estimated distance gets larger as the object gets farther from the cameras. In the next version of this program, I would like to do a complete disparity map where each pixel is painted with a color based on the distance to the object at that location.
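As a rough illustration of the steps described under The Algorithm, here is a minimal Python sketch. It is not the program's actual source: the function names, the default black threshold of 30, and the representation of an image as rows of (r, g, b) tuples are all assumptions made for this example.

```python
import math


def normalize(rgb, black_threshold):
    """Return the unit-length color vector, or None if the pixel counts as black."""
    r, g, b = rgb
    length = math.sqrt(r * r + g * g + b * b)
    if length <= 0 or length < black_threshold:
        return None  # too dark to carry a reliable color direction
    return (r / length, g / length, b / length)


def matches_target(rgb, target_rgb, tolerance, black_threshold=30.0):
    """True if the normalized pixel lies within `tolerance` of the normalized target.

    The default black_threshold is a hypothetical value chosen for illustration.
    """
    pixel = normalize(rgb, black_threshold)
    target = normalize(target_rgb, 0.0)
    if pixel is None or target is None:
        return False
    # Euclidean distance between the two unit vectors in color space
    return math.dist(pixel, target) < tolerance


def center_of_mass(image, target_rgb, tolerance):
    """Mean (x, y) of all pixels that pass the color cut, each with unit mass.

    `image` is assumed to be a list of rows, each row a list of (r, g, b) tuples.
    Returns None when no pixel passes.
    """
    sum_x = sum_y = count = 0
    for y, row in enumerate(image):
        for x, rgb in enumerate(row):
            if matches_target(rgb, target_rgb, tolerance):
                sum_x += x
                sum_y += y
                count += 1
    if count == 0:
        return None
    return (sum_x / count, sum_y / count)


def estimate_distance(p1, p2, separation, image_width, spread_angle):
    """Parallax formula d = s*L / (2*|p1 - p2|*tan(phi/2)), with phi in radians."""
    disparity = abs(p1 - p2)
    if disparity == 0:
        return math.inf  # no measurable offset: object is effectively at infinity
    return separation * image_width / (2 * disparity * math.tan(spread_angle / 2))
```

For example, with the 14 cm separation described above and hypothetical values of a 640-pixel-wide image, a 60 degree spread angle, and centers of mass 50 pixels apart, `estimate_distance(370, 320, 14, 640, math.radians(60))` gives roughly 155 cm. Because the colors are normalized before comparison, a bright red pixel such as (200, 10, 10) and a dimmer one such as (100, 5, 5) are treated the same way by `matches_target`.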